LLMs can solve entirely new tasks in a zero-shot manner.

A ubiquitous emergent ability is zero-shot task solving: as the name suggests, LLMs can perform entirely new tasks that they haven’t encountered in training, without being shown a single solved example. All it takes is some instructions on how to solve the task.

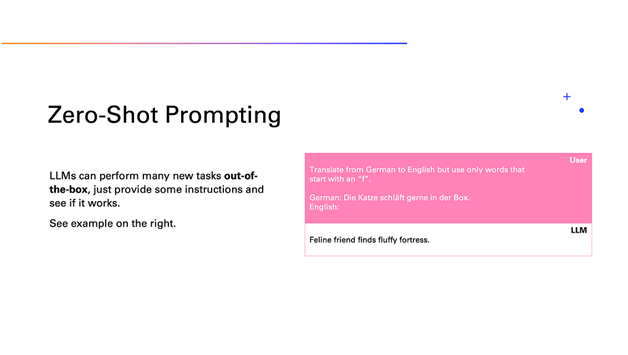

To illustrate this ability with a silly example, you can ask an LLM to translate a sentence from German to English while responding only with words that start with “f”.

For instance, an LLM translated “Die Katze schläft gerne in der Box” (German for “The cat likes to sleep in the box”) as “Feline friend finds fluffy fortress”, which is a pretty cool translation, I think.
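In code, a zero-shot prompt is nothing more than an instruction plus the input, with no solved examples. The sketch below assumes a plain-text prompt and leaves out the actual model call; `build_zero_shot_prompt` and `uses_only_f_words` are hypothetical helpers, the latter a simple check of whether a response obeys the “f” constraint.

```python
import re


def build_zero_shot_prompt(instruction: str, task_input: str) -> str:
    """Zero-shot prompt: an instruction and the input, but no solved examples."""
    return f"{instruction}\n\nInput: {task_input}\nOutput:"


def uses_only_f_words(text: str) -> bool:
    """Return True if every word in `text` starts with 'f' (case-insensitive)."""
    words = re.findall(r"[A-Za-z]+", text)
    return bool(words) and all(word.lower().startswith("f") for word in words)


prompt = build_zero_shot_prompt(
    "Translate the following German sentence into English, "
    "using only words that start with 'f'.",
    "Die Katze schläft gerne in der Box",
)
print(prompt)
print(uses_only_f_words("Feline friend finds fluffy fortress"))  # True
print(uses_only_f_words("The cat likes to sleep in the box"))    # False
```

Checking the output programmatically is useful because a model can follow the instruction only partially, and a zero-shot prompt gives it no example to imitate.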

LLMs, just like humans, can benefit from being given examples or demonstrations.

For more complex tasks, you may quickly realize that zero-shot prompting often requires very detailed instructions, and even then, performance is often far from perfect.

To make another connection to human intelligence, if someone tells you to perform a new task, you would probably ask for some examples or demonstrations of how the task is performed. LLMs can benefit from the same.

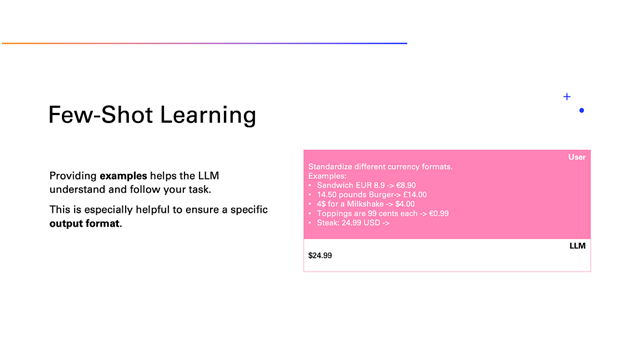

As an example, let’s say you want a model to convert different currency amounts into a common format. You could describe what you want in detail, or just give a brief instruction and a few example demonstrations. The image above shows a sample task.

Using this prompt, the model should do well on the last example, which is “Steak: 24.99 USD”, and respond with $24.99.

Note how we simply left out the solution to the last example. Remember that an LLM is still a text completer at heart, so keep the structure consistent. You should almost force the model to respond with exactly what you want, as we did in the example above.
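The same recipe can be written as a small prompt builder. This is a minimal sketch: the demonstrations, the `->` separator, the instruction wording, and the function names are hypothetical (the exact examples from the image are not reproduced here); only the final query, “Steak: 24.99 USD”, comes from the text. The important detail is that the last line repeats the structure of the demonstrations but leaves the answer blank, so the most natural completion is just the formatted amount.

```python
def build_few_shot_prompt(instruction, demonstrations, query, sep=" ->"):
    """Instruction, then solved examples, then the query with its answer left blank."""
    lines = [instruction, ""]
    for source, target in demonstrations:
        lines.append(f"{source}{sep} {target}")
    # Same structure as the demonstrations, but the answer is left for the model.
    lines.append(f"{query}{sep}")
    return "\n".join(lines)


def parse_completion(completion: str) -> str:
    """Keep only the first line of the model's completion, without surrounding whitespace."""
    stripped = completion.strip()
    return stripped.splitlines()[0] if stripped else ""


demonstrations = [  # hypothetical demonstrations
    ("Coffee: 3.50 USD", "$3.50"),
    ("Burger: 12.00 USD", "$12.00"),
]
prompt = build_few_shot_prompt(
    "Convert each amount into the format $X.XX.",
    demonstrations,
    "Steak: 24.99 USD",
)
print(prompt)
```

A model that picks up the pattern should continue with something like “ $24.99”. If it keeps going and invents another example line, `parse_completion` keeps only the first line.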

To summarize, a general tip is to provide some examples if the LLM is struggling with the task in a zero-shot manner. You will find that this often helps the LLM understand the task, typically making its performance better and more reliable.

Chain-of-thought provides LLMs with a working memory, which can improve their performance substantially, especially on more complex tasks.

Another interesting ability of LLMs is also reminiscent of human intelligence. It is especially useful if the task is more complex and requires multiple steps of reasoning to solve.

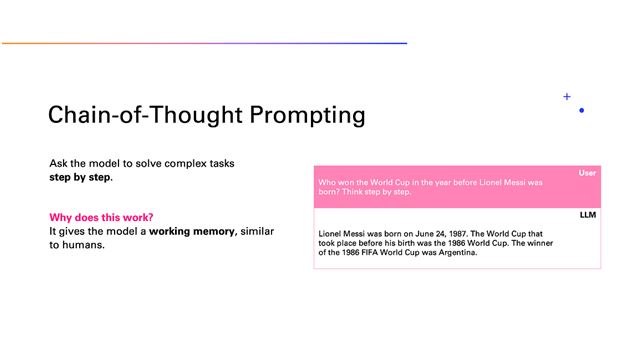

Let’s say I ask you “Who won the World Cup in the year before Lionel Messi was born?” What would you do? You would probably solve this step by step by writing down any intermediate solutions needed in order to arrive at the correct answer. And that’s exactly what LLMs can do too.

It has been found that simply telling an LLM to “think step by step” can increase its performance substantially on many tasks.
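Applied to a prompt, this trick is a one-line change. The sketch below is an illustration under assumptions rather than a fixed recipe: the `Q:`/`A:` layout, the exact trigger sentence, and the optional second prompt that asks for the final answer after the reasoning are all choices you can vary, and the function names are hypothetical.

```python
COT_TRIGGER = "Let's think step by step."


def build_cot_prompt(question: str, trigger: str = COT_TRIGGER) -> str:
    """Zero-shot chain-of-thought: begin the answer with a reasoning trigger."""
    return f"Q: {question}\nA: {trigger}"


def build_answer_prompt(cot_prompt: str, reasoning: str) -> str:
    """Second pass: append the generated reasoning, then ask for the final answer only."""
    return f"{cot_prompt} {reasoning.strip()}\nTherefore, the answer is"


question = "Who won the World Cup in the year before Lionel Messi was born?"
first_prompt = build_cot_prompt(question)
print(first_prompt)
```

The reasoning the model generates from `first_prompt` would then be passed to `build_answer_prompt`, so the short final answer is produced with all intermediate steps already in the context.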

Why does this work? In principle, the model has all the knowledge it needs to answer this question. The problem is that such an unusual piece of composite knowledge is probably not stored directly in the LLM’s internal memory. However, the individual facts likely are, such as Messi’s birth year and the winners of the various World Cups.
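The decomposition can be made concrete with a toy “memory” that, by assumption, stores only individual facts: Messi’s birth year (1987) and a few World Cup winners. A direct lookup of the composite question fails, while chaining two simple lookups with one subtraction succeeds. The dictionary and its keys are illustrative only and say nothing about how an LLM actually stores knowledge.

```python
# Toy knowledge store holding individual facts only (illustrative, not a model of LLM internals).
memory = {
    "messi_birth_year": 1987,
    "world_cup_winner": {1982: "Italy", 1986: "Argentina", 1990: "West Germany"},
}

# The composite fact was never stored, so a direct lookup finds nothing.
direct_answer = memory.get("world_cup_winner_year_before_messi_born")

# Step by step: recall one fact, do a small computation, recall another fact.
birth_year = memory["messi_birth_year"]           # 1987
target_year = birth_year - 1                      # 1986
answer = memory["world_cup_winner"][target_year]  # "Argentina"

print(direct_answer)  # None
print(answer)         # Argentina
```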

Allowing the LLM to build up to the final answer helps because it gives the model time to think out loud (a working memory, so to speak) and to solve the simpler sub-problems before giving the final answer.

The key here is to remember that everything to the left of a to-be-generated word is context that the model can rely on. So, as shown in the image above, by the time the model says “Argentina”, Messi’s birth year and the year of the World Cup we asked about are already in the LLM’s working memory, which makes it easier to answer correctly.
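The loop below sketches why this matters: in autoregressive generation, each new token is appended to the context, and the next prediction is made from that whole context. `toy_next_token` is a hypothetical, hand-written stand-in for an LLM that can take only one small step at a time, so it can name the winner only once the intermediate year is already in its context. Tokens are simplified to whitespace-separated words.

```python
from typing import Callable, List

WORLD_CUP_WINNERS = {1982: "Italy", 1986: "Argentina", 1990: "West Germany"}
MESSI_BIRTH_YEAR = 1987
EOS = "<eos>"


def toy_next_token(context: List[str]) -> str:
    """Hypothetical stand-in for an LLM: takes exactly one small reasoning step per call."""
    if context[-1] in WORLD_CUP_WINNERS.values():
        return EOS                               # final answer already given, stop
    years = [int(token) for token in context if token.isdigit()]
    if not years:
        return str(MESSI_BIRTH_YEAR)             # step 1: recall a single fact
    if len(years) == 1:
        return str(years[0] - 1)                 # step 2: uses the year already in context
    return WORLD_CUP_WINNERS[years[-1]]          # step 3: uses the derived year in context


def generate(
    prompt: List[str],
    next_token: Callable[[List[str]], str],
    max_new_tokens: int = 10,
) -> List[str]:
    """Autoregressive loop: every generated token becomes context for the next one."""
    context = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(context)              # conditions on everything to the left
        if token == EOS:
            break
        context.append(token)
    return context[len(prompt):]


prompt = "Who won the World Cup in the year before Lionel Messi was born?".split()
print(generate(prompt, toy_next_token))  # ['1987', '1986', 'Argentina']
```

A real model is not scripted like this; the point is only that the answer token is predicted with 1987 and 1986 already to its left.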